The advent of digital communication and the exponential growth of internet traffic have necessitated robust infrastructure to handle, mediate, and secure data flow between servers and clients. Among these technologies, proxies are especially influential.

They are infrastructure components, often undervalued, that serve as middlemen in digital communication. Though their structure and purpose are highly technical, their effect is deeply architectural: they shape how information is routed, examined, modified, and eventually delivered across the internet.

To understand proxies in all their intricacy, one must consider not only what proxies are, but also how they operate within contemporary digital systems, why they are employed at various layers of a network, and what structural consequences arise from their use.

This means exploring the technical configuration of proxies, their deployment models, how they interact with client-server architecture, and the network patterns that they naturally produce.

The Functional Core of Proxies

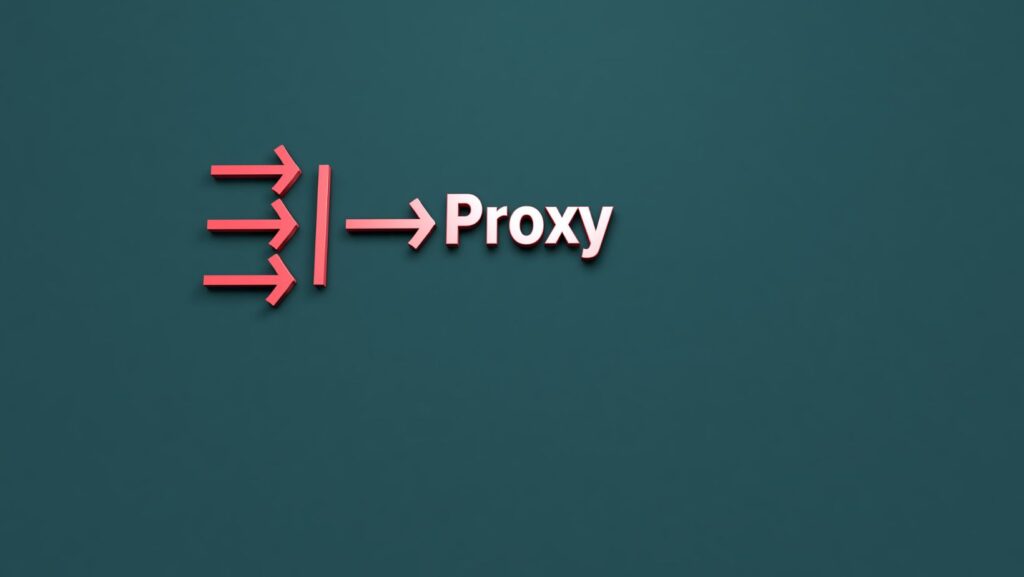

A proxy, in its simplest form, is an intermediary server that acts on behalf of a client making a request to a server that holds the requested resource.

Rather than the client communicating directly with the destination server, the proxy intercepts the request, processes it, possibly modifies it, and then forwards it to either the origin server or another proxy server.

The server’s response then travels back along the reverse path through the proxy to reach the client. Users who prefer not to install a dedicated application can also use web-based proxy services, such as a nebula proxy.

This intermediary function allows the proxy to perform a number of operations: traffic filtering, enforcing access control, caching of the content, anonymization of client requests, load balancing, and protocol translation.

The idea is that a proxy abstracts and intermediates the communication path, placing itself between two points and hence gaining partial or full control of the session flow.
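The intermediary role described above can be illustrated with a toy, in-memory model. This is a minimal sketch rather than a real network proxy: the `SimpleProxy` class, the `Response` type, and the `origin` callable are hypothetical names introduced for illustration, and only three of the listed operations (access control, caching, and forwarding) are modeled.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set


@dataclass
class Response:
    status: int
    body: str
    from_cache: bool = False


@dataclass
class SimpleProxy:
    """Toy intermediary that filters, caches, and forwards requests."""
    origin: Callable[[str], Response]  # hypothetical upstream fetch function
    blocked_hosts: Set[str] = field(default_factory=set)
    cache: Dict[str, Response] = field(default_factory=dict)

    def handle(self, host: str, path: str) -> Response:
        # Access control: refuse requests to blocked hosts.
        if host in self.blocked_hosts:
            return Response(403, "blocked by proxy policy")
        key = f"{host}{path}"
        # Caching: serve a stored copy when one is available.
        cached = self.cache.get(key)
        if cached is not None:
            return Response(cached.status, cached.body, from_cache=True)
        # Forwarding: pass the request upstream and relay the response back.
        response = self.origin(key)
        if response.status == 200:
            self.cache[key] = response
        return response
```

Because every request passes through `handle`, the proxy decides what is blocked, what is served locally, and what reaches the origin, which is the control over the session flow that the text describes.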

Proxies in Network Architecture

In real network architectures, proxies are deployed for one or more architectural purposes. Forward proxies sit close to the client and are primarily used to manage outbound access and control data flows leaving a controlled environment, such as a corporate LAN.

They enable organizations to enforce internet usage policy, cache high-demand content for improved performance, and give internal devices an additional layer of obfuscation.

Reverse proxies, by contrast, are positioned near the origin servers and handle incoming traffic.

They serve as the first point of contact for incoming requests, where they can distribute traffic among backend servers, terminate SSL/TLS sessions to offload cryptographic processing from the backends, cache static content, and inspect requests against application-layer security policies.

Reverse proxies are building blocks of content delivery networks (CDNs) and are critical in today’s high-availability and high-performance web systems.
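Distributing traffic among backend servers can be sketched with a simple round-robin selector. This is only one of several possible strategies, and the `RoundRobinBalancer` class and the backend names are assumptions made for illustration; production reverse proxies typically add health checks, weighting, and connection awareness.

```python
import itertools
from typing import Iterator, List


class RoundRobinBalancer:
    """Hands out backends in a fixed rotating order."""

    def __init__(self, backends: List[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._cycle: Iterator[str] = itertools.cycle(backends)

    def next_backend(self) -> str:
        # Each call returns the next backend, wrapping around at the end.
        return next(self._cycle)


balancer = RoundRobinBalancer(["app-1:8080", "app-2:8080", "app-3:8080"])
chosen = [balancer.next_backend() for _ in range(4)]
# The fourth request wraps around to the first backend again.
```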

Apart from these, there are also transparent proxies, which can function without the need for client-side setup. These are usually used in ISP-level traffic shaping or in enterprise settings where enforcement of network policy needs to take place without direct end-user involvement.

Systemic Consequences and Behavioral Patterns

Proxies always have systemic effects on latency, observability, control granularity, and trust boundaries. By their very nature, proxies add a network hop, which adds latency. With well-designed caching and routing, however, this overhead can be offset or even turned into a net performance gain.
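The trade-off between the extra hop and caching can be made concrete with a small calculation. The model below is a deliberate simplification and an assumption of this sketch: every request pays `proxy_ms` to reach the proxy, cache misses additionally pay `origin_ms`, and a direct request without the proxy costs `origin_ms`.

```python
def expected_latency_ms(hit_ratio: float, proxy_ms: float, origin_ms: float) -> float:
    """Average latency through a caching proxy under the simplified model."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    # Every request pays the proxy hop; only cache misses pay the origin trip.
    return proxy_ms + (1.0 - hit_ratio) * origin_ms


def break_even_hit_ratio(proxy_ms: float, origin_ms: float) -> float:
    """Hit ratio at which the proxied path matches the direct path."""
    # Solve proxy_ms + (1 - h) * origin_ms == origin_ms for h.
    return proxy_ms / origin_ms
```

With an assumed 5 ms proxy hop and a 100 ms origin round trip, a proxy with no cache hits averages 105 ms, while a 50% hit ratio brings the average down to 55 ms. Under this model, any hit ratio above 5% makes the proxied path faster than the direct one.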

From a security standpoint, proxies are double-edged swords. Their ability to inspect and manipulate traffic enables them to enforce strict security controls, block malicious content, and isolate sensitive backend infrastructure from public view.

But this same ability makes proxies themselves valuable targets and potential vectors for traffic tampering or data eavesdropping.

Proxies also shape operational behavior. Network traffic is easier to predict and monitor in centralized proxy scenarios, which benefits both performance optimization and security monitoring.

Centralized proxies, however, can become single points of failure, so they require careful architectural planning to ensure redundancy and scalability.
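One common way to avoid a single point of failure is to run several proxy instances and route around unhealthy ones. The sketch below shows only the selection step; the `pick_proxy` function, the instance names, and the idea that health status arrives as a dictionary are all assumptions for illustration, since real deployments obtain this information from health checks or a load balancer.

```python
from typing import Dict, List, Optional


def pick_proxy(priority_order: List[str], healthy: Dict[str, bool]) -> Optional[str]:
    """Return the first healthy proxy in priority order, or None if all are down."""
    for name in priority_order:
        # Instances with unknown status are treated as unhealthy.
        if healthy.get(name, False):
            return name
    return None


proxies = ["proxy-a", "proxy-b", "proxy-c"]
status = {"proxy-a": False, "proxy-b": True, "proxy-c": True}
selected = pick_proxy(proxies, status)  # proxy-a is down, so proxy-b is chosen
```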

Actors and Stakeholders Affected

The effect of proxy deployment varies significantly depending on the actor. Network administrators have greater visibility and control over traffic so that they can impose corporate policy more effectively and spot anomalous behavior.

System architects gain modularity and scalability, particularly in web services where reverse proxies can hide the complexity of backend service orchestration.

End users see mixed outcomes. In some use cases, proxies improve performance through content caching and route optimization. In others, they can introduce noticeable latency or interfere with end-to-end encryption, particularly when SSL/TLS interception is used.

Privacy implications are equally important: proxies can see both the content and the metadata of user communications, so transparent and ethical operation is essential.

For application developers, the presence of a proxy alters assumptions about network path properties and client IP visibility. This directly affects application-level features such as geolocation, rate limiting, and user session management.

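Developers must therefore design systems that are proxy-aware, typically by relying on de facto standard headers like X-Forwarded-For, or the standardized Forwarded header (RFC 7239), to recover original client information. Because clients can set these headers themselves, they should be trusted only when they arrive through known proxies.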
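A proxy-aware application might recover the client address along the following lines. This is a hedged sketch, not a reference implementation: the `client_ip` function and the trusted network ranges are assumptions, and it relies on the common convention that each proxy appends the address of its peer to the right-hand end of X-Forwarded-For.

```python
import ipaddress
from typing import Iterable


def client_ip(remote_addr: str, xff_header: str, trusted: Iterable[str]) -> str:
    """Return the nearest address in the chain that is not a trusted proxy."""
    trusted_nets = [ipaddress.ip_network(net) for net in trusted]

    def is_trusted(addr: str) -> bool:
        try:
            ip = ipaddress.ip_address(addr)
        except ValueError:
            return False  # malformed entries are never treated as trusted
        return any(ip in net for net in trusted_nets)

    # Chain order: original client, then each proxy, then the direct peer.
    hops = [h.strip() for h in xff_header.split(",") if h.strip()]
    hops.append(remote_addr)
    # Walk backwards from the direct peer; stop at the first untrusted hop.
    for addr in reversed(hops):
        if not is_trusted(addr):
            return addr
    # Every hop was a trusted proxy, so fall back to the leftmost entry.
    return hops[0]
```

Walking the chain from the right means entries that a client injected at the left of the header are ignored unless every hop after them is trusted, which is why the function does not simply take the first value.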

Architectural Solutions and Patterns

Proxy technologies have matured into sophisticated platforms, many of which bundle additional capabilities such as Web Application Firewalls (WAFs), API gateways, and observability tools.

Technologies such as NGINX, HAProxy, Envoy, and Traefik offer robust reverse proxy capability, expanding on the traditional routing functionality with advanced layer 7 processing techniques, including request transformation, authentication, and dynamic routing.

More recently, the service mesh model, represented by Istio and Linkerd, has extended the concept of the proxy to the microservices world. In a service mesh, small proxies (typically called sidecars) run alongside each instance of a service and intercept all traffic going in and out.

This allows for fine-grained control of inter-service communication, including mutual TLS, traffic shaping, retries, circuit breaking, and telemetry collection.
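Of the controls listed above, circuit breaking is perhaps the least self-explanatory, so a small sketch may help. The `CircuitBreaker` class below is a generic illustration of the pattern and does not reflect how Istio, Linkerd, or any specific sidecar implements it; the thresholds and the injectable `clock` parameter are assumptions chosen to keep the example testable.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Stops sending requests to a failing service until a cool-down passes."""

    def __init__(self, max_failures: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def allow_request(self) -> bool:
        if self._opened_at is None:
            return True  # circuit closed: traffic flows normally
        # Circuit open: allow a trial request only after the cool-down.
        return self._clock() - self._opened_at >= self.reset_after_s

    def record_success(self) -> None:
        # A success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None

    def record_failure(self) -> None:
        self._failures += 1
        if self._failures >= self.max_failures:
            # Too many failures: open (or re-open) the circuit now.
            self._opened_at = self._clock()
```

A sidecar applying this pattern would call `allow_request` before forwarding, then report the outcome with `record_success` or `record_failure`, so the calling service never needs to contain this logic itself.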

In these new-generation architectures, proxies are no longer mere traffic relays but programmable control points placed everywhere in the infrastructure. They establish a fabric of intermediation, where policy and telemetry are continually enforced and collected across all services, regardless of underlying application code.

Conclusion

The role of proxies in today’s digital infrastructure is essential and ubiquitous. As mediators of network communication, they provide the capabilities that make performance, security, scalability, and observability achievable.

Their deployment shapes system behavior at scale, influences architectural styles, and redefines trust boundaries in enterprise and internet-scale contexts.

Understanding proxies takes not just technical sophistication but architectural insight. They are not simply a tool but an inherent part of the architecture of networked systems. As digital communication keeps growing and diversifying, proxies will remain at the center of it all: quiet, calculated, and structurally necessary.